Token-Level Truth: Real-Time Hallucination Detection for Production LLMs


Your LLM just called a tool, received accurate data, and still got the answer wrong. Welcome to the world of extrinsic hallucination—where models confidently ignore the ground truth sitting right in front of them.

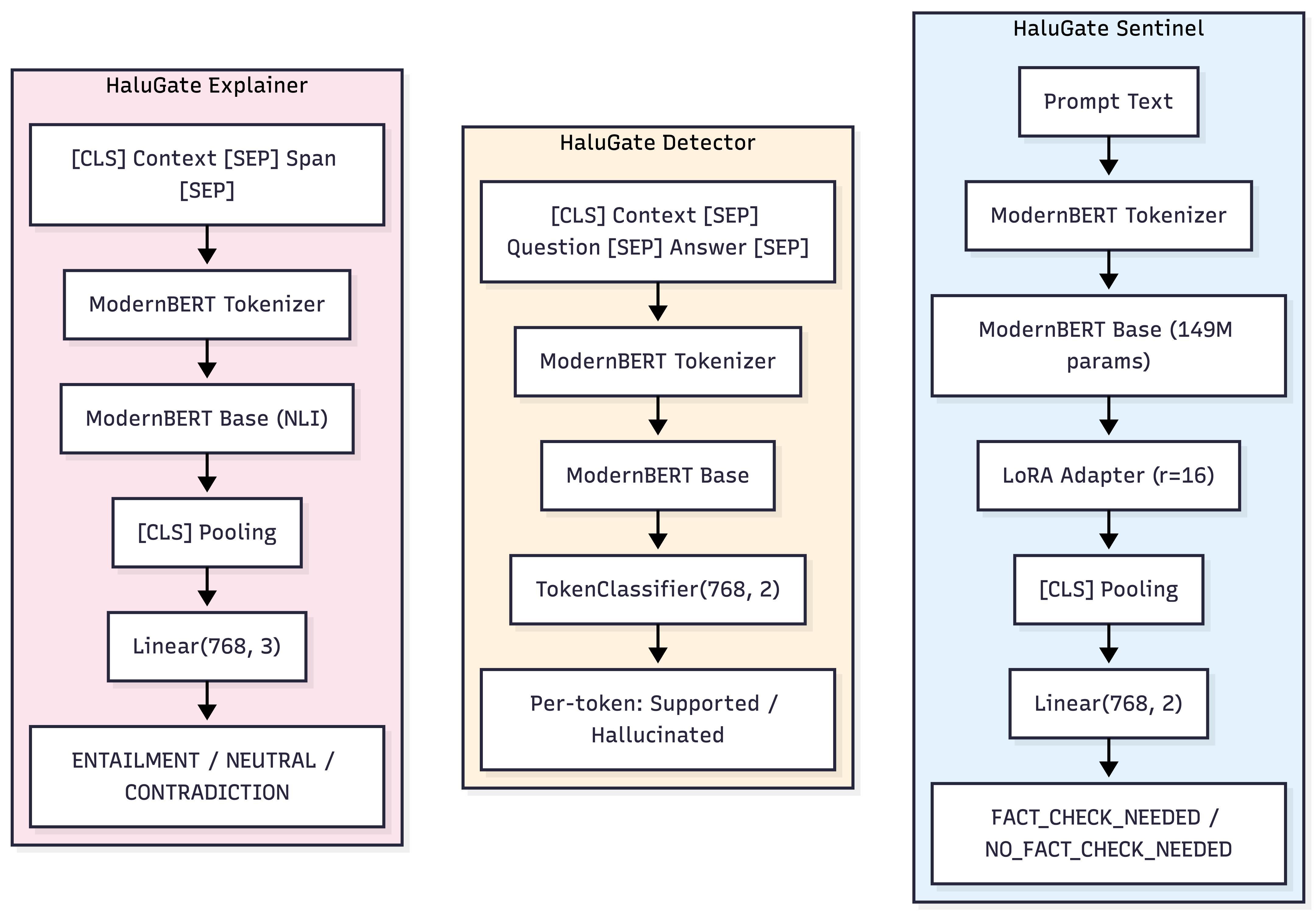

Building on our Signal-Decision Architecture, we introduce HaluGate—a conditional, token-level hallucination detection pipeline that catches unsupported claims before they reach your users. No LLM-as-judge. No Python runtime. Just fast, explainable verification at the point of delivery.
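Here is what "token-level" can mean in practice. One common approach is for a detector to give each generated token a score for how likely it is to be unsupported by the provided context. Runs of adjacent flagged tokens are then merged into spans that can be reported. The sketch below shows only that post-processing step, plus a simple conditional gate. Several parts are assumptions and not HaluGate's API:

- The scores are hard-coded stand-ins for a token classifier's output.
- `FlaggedSpan`, `flag_spans`, `check_response`, and the 0.5 threshold are hypothetical names and values.

It is written in Python for readability only; the text above notes that HaluGate itself runs without a Python runtime.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class FlaggedSpan:
    start: int        # index of the first flagged token
    end: int          # exclusive end index
    text: str         # surface text of the span
    max_score: float  # highest per-token score inside the span


def _make_span(tokens: Sequence[str], scores: Sequence[float],
               start: int, end: int) -> FlaggedSpan:
    return FlaggedSpan(start, end, "".join(tokens[start:end]).strip(),
                       max(scores[start:end]))


def flag_spans(tokens: Sequence[str], scores: Sequence[float],
               threshold: float = 0.5) -> List[FlaggedSpan]:
    """Merge runs of consecutive tokens scoring >= threshold into spans."""
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    spans: List[FlaggedSpan] = []
    start: Optional[int] = None
    for i, score in enumerate(scores):
        if score >= threshold:
            if start is None:
                start = i  # open a new span at the first flagged token
        elif start is not None:
            spans.append(_make_span(tokens, scores, start, i))  # close it
            start = None
    if start is not None:  # the last span runs to the final token
        spans.append(_make_span(tokens, scores, start, len(scores)))
    return spans


def check_response(tokens: Sequence[str], scores: Sequence[float],
                   has_tool_context: bool,
                   threshold: float = 0.5) -> List[FlaggedSpan]:
    """Hypothetical conditional gate: skip detection entirely when there is
    no tool or retrieved context to verify the answer against."""
    if not has_tool_context:
        return []
    return flag_spans(tokens, scores, threshold)


# Illustration: the tool reported 18°C, but the model wrote 31°C.
# These scores are made up and stand in for a detector's per-token output.
tokens = ["The", " temperature", " in", " Paris", " is", " 31", "°C", "."]
scores = [0.02, 0.05, 0.01, 0.03, 0.04, 0.91, 0.88, 0.02]
for span in check_response(tokens, scores, has_tool_context=True):
    print(span)
```

The function returns spans with token positions rather than a single pass/fail verdict. A caller can use those positions to highlight, annotate, or strip exactly the unsupported claim, which is one way to deliver the explainability described above.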

Synced from the official vLLM Blog: Token-Level Truth: Real-Time Hallucination Detection for Production LLMs