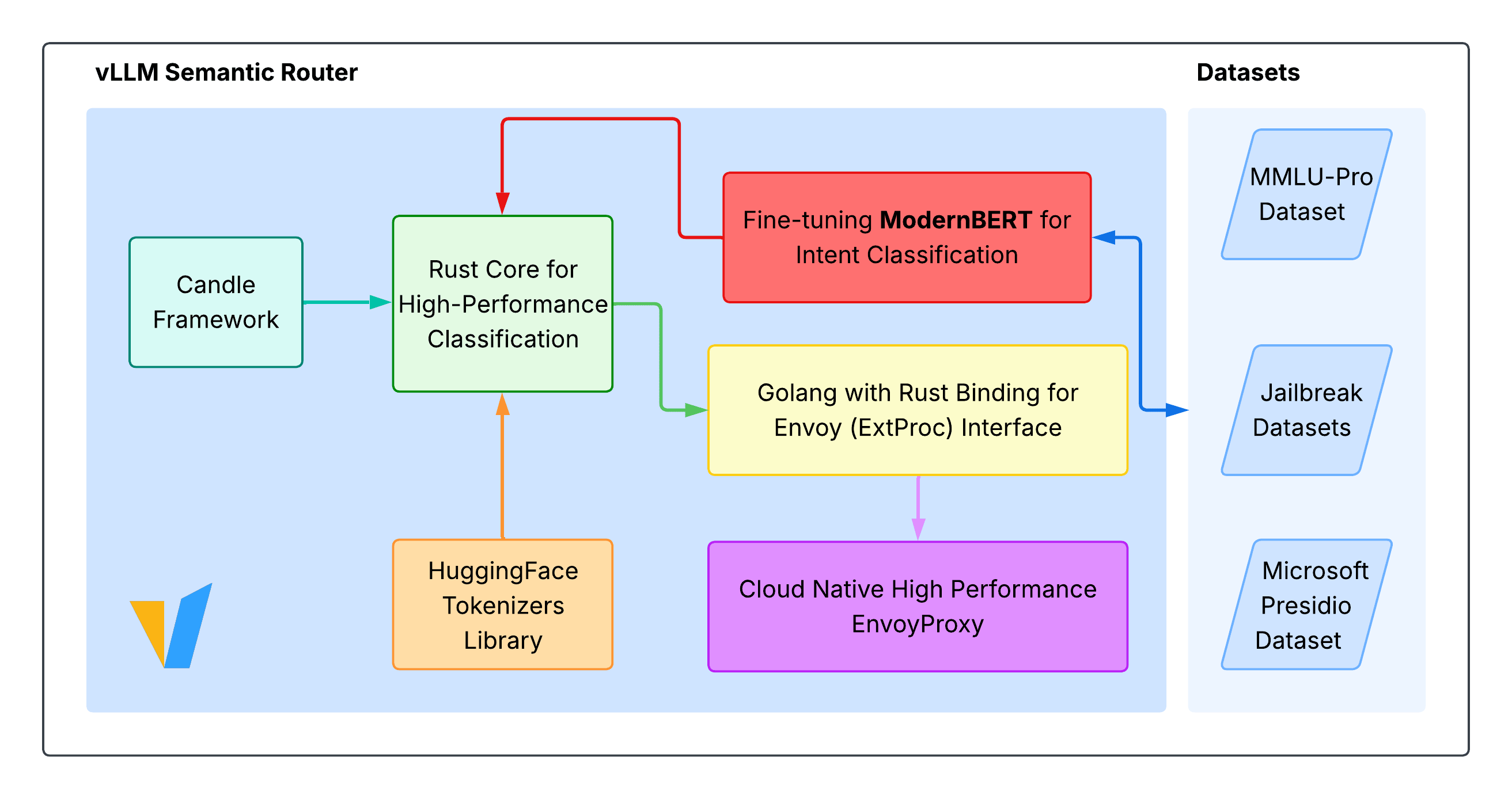

🧠 Neural Processing Architecture

Powered by encoder-only models, SLMs, and LLMs, with advanced semantic understanding for intelligent model routing and selection.

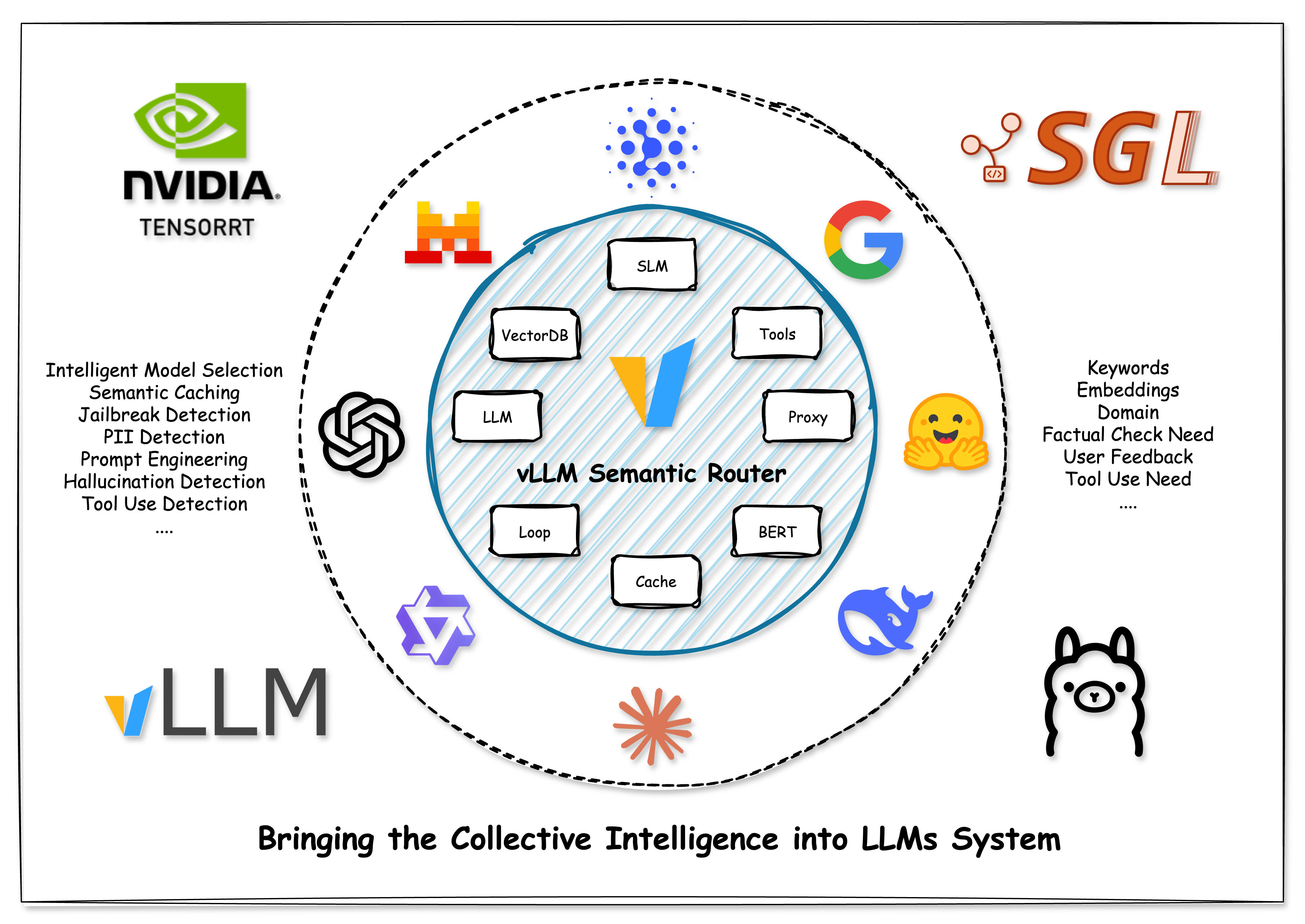

🏗️ Architecture

🎯 Our Goals

Building system-level intelligence for Mixture-of-Models (MoM) and bringing collective intelligence into LLM systems.

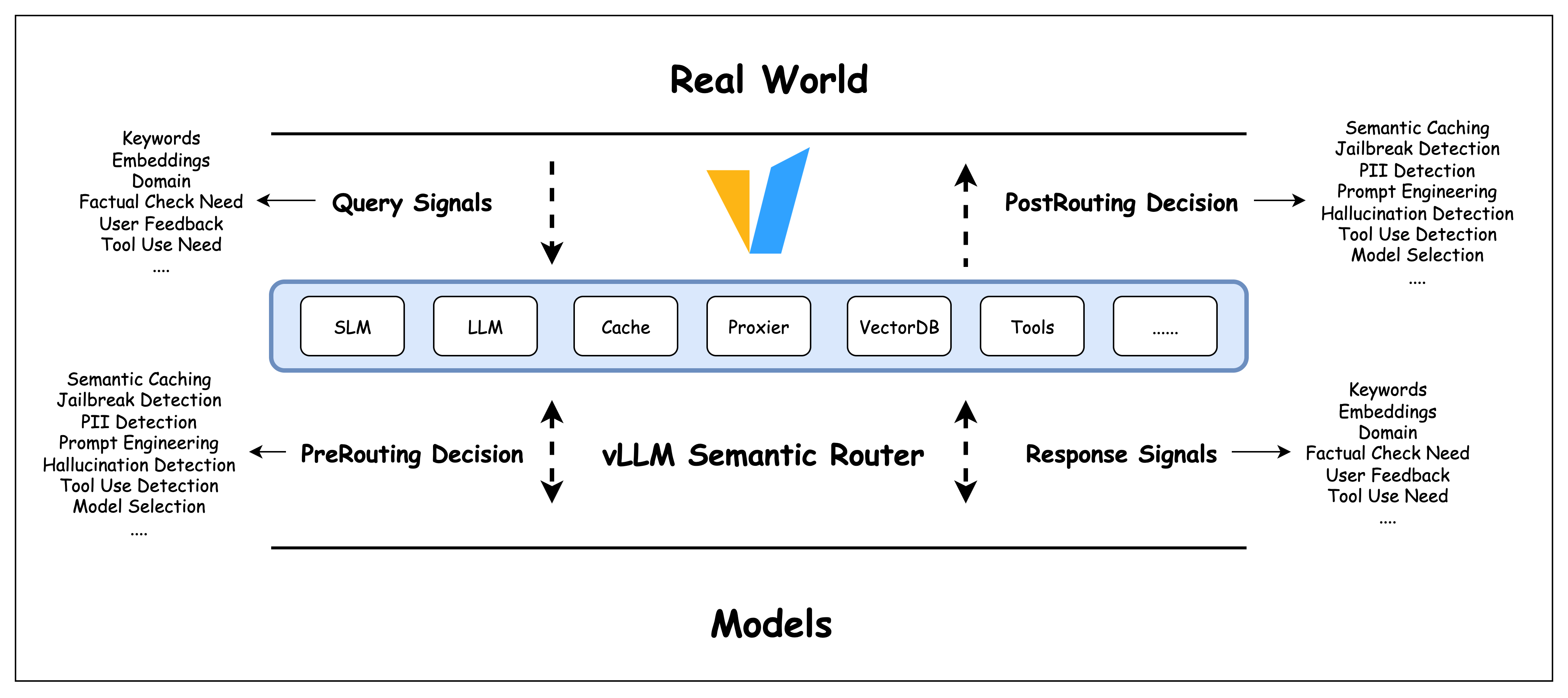

📍 Where it lives

It lives between the real world and the models.

👥 Meet Our Team

The amazing people behind vLLM Semantic Router:

Huamin Chen (Maintainer), Distinguished Engineer @Red Hat

Chen Wang (Maintainer), Senior Staff Research Scientist @IBM

Yue Zhu (Maintainer), Staff Research Scientist @IBM

Xunzhuo Liu (Maintainer), AI Networking @Tencent

Senan Zedan (Committer), R&D Manager @Red Hat

samzong (Committer), AI Infrastructure / Cloud-Native PM @DaoCloud

Liav Weiss, Software Engineer @Red Hat

Asaad Balum, Senior Software Engineer @Red Hat

Yehudit, Software Engineer @Red Hat

Noa Limoy, Software Engineer @Red Hat

JaredforReal (Committer), Software Engineer @Z.ai

Srinivas A, Software Engineer @Yokogawa

carlory, Open Source Engineer @DaoCloud

Yossi Ovadia (Committer), Senior Principal Engineer @Red Hat

Jintao Zhang (Committer), Senior Software Engineer @Kong

yuluo-yx (Committer), Individual Contributor

cryo-zd (Committer), Individual Contributor

OneZero-Y (Committer), Individual Contributor

aeft (Committer), Individual Contributor

Acknowledgements

vLLM Semantic Router was born in open source and is built on open source ❤️